Introduction

Every 3D printing enthusiast has experienced the frustration of returning to find a failed print that’s been churning out plastic spaghetti for hours. This Raspberry Pi project monitors your prints in real-time, detecting common failures like adhesion problems and spaghetti disasters before they waste your filament.

What You’ll Build

A smart monitoring system that:

- Detects spaghetti failures using computer vision (edge density analysis)

- Automatically pauses the print (via OctoPrint) when chaos is detected

- Sends notifications to your phone

- Creates time-lapse videos of successful prints

- Integrates with OctoPrint (optional)

- Works with any FDM 3D printer

Real Results

After building this system, I’ve:

- Saved ~£64 in wasted filament over 6 months

- Caught 14 major failures before they became disasters

- Enabled truly unattended overnight printing

- Created automatic time-lapses of every successful print

Total build time: 2-3 hours

Total cost: £45-65 depending on options

How It Works: Computer Vision, Not AI

Important: This system uses computer vision (CV), not artificial intelligence (AI). There’s a critical difference:

- Computer Vision: Rule-based algorithms (edge detection, contour analysis). Fast, lightweight, deterministic. Runs on Pi Zero 2 W.

- Artificial Intelligence: Machine learning models trained on data. More accurate but requires training data and more computing power.

Detection Method: Edge Density Analysis

The system analyzes your print bed every 30 seconds:

- Camera captures image of print bed

- Grayscale conversion for faster processing

- Gaussian blur to reduce noise

- Canny edge detection finds all edges in image

- Regional density calculation - divides frame into quadrants and measures the fraction of edge pixels in each

- Chaos detection - spaghetti creates high edge density with high variation between regions
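The quadrant statistics and the failure rule can be tried out without a camera. This is a minimal, dependency-free sketch: it uses plain Python lists of 0/1 values in place of the NumPy edge map that cv2.Canny returns, and the helper names (quadrant_densities, is_spaghetti) are hypothetical. The 0.12 threshold and 0.04 spread limit mirror the values used in detect_failures.py.

```python
from statistics import mean, pstdev


def quadrant_densities(edges):
    """Fraction of non-zero (edge) pixels in each quadrant of a 2-D edge map.

    `edges` is a list of equal-length rows, standing in for cv2.Canny's output.
    Order: top-left, top-right, bottom-left, bottom-right.
    """
    h, w = len(edges), len(edges[0])
    quads = [
        (0, h // 2, 0, w // 2),
        (0, h // 2, w // 2, w),
        (h // 2, h, 0, w // 2),
        (h // 2, h, w // 2, w),
    ]
    densities = []
    for r0, r1, c0, c1 in quads:
        cells = [edges[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        densities.append(sum(1 for v in cells if v) / len(cells))
    return densities


def is_spaghetti(densities, threshold=0.12, std_limit=0.04):
    """High mean density AND high spread between quadrants = failure."""
    m = mean(densities)
    s = pstdev(densities)  # population std, same as np.std's default
    return m > threshold and s > std_limit, m, s


# One chaotic quadrant: flagged. Uniformly busy frame: not flagged (no spread).
print(is_spaghetti(quadrant_densities([[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]])))
print(is_spaghetti(quadrant_densities([[1] * 4 for _ in range(4)])))
```

The second case is why a dense but even infill pattern is not automatically treated as spaghetti: both conditions must hold.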

Why edge detection works for spaghetti:

- Normal prints have predictable edge patterns (geometric shapes)

- Spaghetti failures create chaotic, random edge patterns

- High variation between regions = something went wrong

Limitations you should know:

- Complex infill patterns (Gyroid, honeycomb) can trigger false positives

- Detailed organic models (miniatures, figurines) may confuse the detector

- Changing lighting (sunlight moving across bed) causes false alarms

- First layers have low edge density - detector only works after layer 3-5

Realistic accuracy: 75-85% on typical prints (simple geometries, standard infill)

False positive rate: 5-10% without proper lighting, <2% with LED ring light

Parts List

Budget Option: Pi Zero 2 W Build (£47-59)

| Item | Purpose | Cost | Where to Buy |
|---|---|---|---|
| Raspberry Pi Zero 2 W | Main computer | £18 | Pimoroni, PiHut, CPC |
| Pi Camera Module V2 | Image capture | £22 | Amazon, Pimoroni |
| MicroSD Card (32GB Class 10) | Storage | £7 | Amazon |
| 5V 2.5A Micro USB PSU | Power for Pi Zero | £7 | Amazon, official |

Total: £47-59 (£54 at the prices above)

Strongly recommended extras:

- LED Ring Light (USB, 5V) - effectively required for reliable detection

- Flexible camera arm - OR 3D print a mount

Standard Option: Pi 4 Build

Use this if you’re also running OctoPrint on the same Pi:

| Item | Purpose | Cost (UK) |
|---|---|---|
| Raspberry Pi 4 (2GB) | Main computer + OctoPrint | £55 |
| Pi Camera Module V2 | Image capture | £22 |
| MicroSD Card (32GB) | Storage | £7 |
| 5V 3A USB-C PSU | Power for Pi 4 | £9 |

Total: £93 at the prices above (before ring light and mount)

What You Might Already Have

- HDMI cable + monitor (for initial setup only)

- USB keyboard + mouse (for initial setup only)

- Ethernet cable (Pi 4 only; the Pi Zero 2 W has no Ethernet port) or WiFi

Step 1: Hardware Setup

1.1 Connect Pi Camera Module

For Pi Zero 2 W:

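1. Locate the smaller 22-pin camera connector on the short edge of the Pi Zero (the standard 15-pin cable supplied with Camera Module V2 won't fit; use a Pi Zero camera cable)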

2. Gently pull up the black plastic clip

3. Insert the ribbon cable with the contacts facing DOWN (toward the PCB)

4. Push clip back down to secure

5. Cable should be firmly held but not kinked

For Pi 4:

1. Locate the camera port (labelled CAMERA, between the micro-HDMI ports and the audio jack)

2. Pull up black clip

3. Insert ribbon cable with BLUE side facing ethernet port

4. Push clip down

Common mistakes:

- Ribbon cable inserted backwards

- Clip not fully pushed down

- Cable kinked at sharp angle

1.2 Position Camera Over Print Bed

Critical requirements for CV to work:

- Consistent lighting - shadows moving across bed will trigger false alarms

- Stable mount - printer vibrations shouldn’t shake camera

- Full bed coverage - entire build plate visible in frame

- No glare/reflections - from glass beds or enclosure windows

Ideal camera placement:

- Height: 25-40cm above print bed (closer for smaller beds)

- Angle: 10-15° tilt (not perfectly overhead) for better layer visibility

- Lighting: LED ring light mounted around camera lens
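To check whether a given height actually gives full bed coverage, you can estimate the camera's footprint from its field of view: for a camera pointing straight down, visible width = 2 × height × tan(FOV / 2). The sketch below assumes the Camera Module V2's published 62.2° × 48.8° field of view, ignores the 10-15° tilt, and assumes the full sensor area is used (Picamera2 may choose a cropped sensor mode at low resolutions, which narrows the view). The function names and the 10% margin are illustrative choices.

```python
import math

# Camera Module V2 published field of view (assumes full-sensor readout)
H_FOV_DEG = 62.2
V_FOV_DEG = 48.8


def footprint_cm(height_cm, h_fov=H_FOV_DEG, v_fov=V_FOV_DEG):
    """Width and depth (cm) visible from a camera pointing straight down."""
    w = 2 * height_cm * math.tan(math.radians(h_fov / 2))
    h = 2 * height_cm * math.tan(math.radians(v_fov / 2))
    return w, h


def min_height_cm(bed_w_cm, bed_d_cm, margin=1.1):
    """Lowest height that keeps the whole bed (plus a margin) in frame."""
    need_w = bed_w_cm * margin / (2 * math.tan(math.radians(H_FOV_DEG / 2)))
    need_d = bed_d_cm * margin / (2 * math.tan(math.radians(V_FOV_DEG / 2)))
    return max(need_w, need_d)  # the tighter axis decides


w, d = footprint_cm(30)
print(f"At 30 cm the camera sees about {w:.1f} x {d:.1f} cm")
print(f"22 x 22 cm bed needs at least {min_height_cm(22, 22):.1f} cm")
```

For a 22 × 22 cm print area (e.g. an Ender 3 V2), this comes out at roughly 27 cm, at the low end of the 25-40 cm range above; the narrower vertical field of view is the limiting axis.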

Mounting options:

Option A: 3D Print Custom Mount (Best, almost free)

- Search Thingiverse: “[your printer] camera mount”

- Popular: “Ender 3 V2 camera mount”, “Prusa MK3 camera arm”

- Print in PETG for heat resistance near heated bed

- Cost: ~£0.50 in filament

Option B: Flexible Arm Mount (Easiest)

- Attach gooseneck arm to printer frame with clamp

- Magnetic or clip-on camera holder

- Adjust position as needed

- Cost: £8-12

Option C: Desk Clamp + Tripod Ball Head

- Clamp to desk/table near printer

- Use small tripod head for angle adjustment

- Cost: £6-10 if you have spare parts

1.3 LED Ring Light Setup (CRITICAL)

Why this is not optional for reliable detection:

- Shadows = false edges = false positives

- Changing daylight = detection chaos

- Consistent lighting = reliable edge detection

Setup:

1. Mount USB LED ring light around camera lens

2. Power from a Pi USB port (most draw <0.5A); on the Pi Zero 2 W this needs a micro-USB OTG adapter

3. Position 20-25cm from print bed

4. Set brightness to 60-80%

5. Aim for even illumination across bed

Test lighting:

- Take test photo in daylight

- Take test photo at night

- Both should have similar brightness and contrast
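You can also compare the two test photos numerically instead of by eye: the mean pixel value approximates brightness and the standard deviation approximates contrast. This is a minimal sketch; the file names day.jpg and night.jpg and the tolerances (15 grey levels, 30% contrast difference) are hypothetical starting points, not calibrated values.

```python
from statistics import mean, pstdev


def lighting_stats(pixels):
    """Return (brightness, contrast) as mean and population std of 0-255 grey values."""
    values = list(pixels)
    return mean(values), pstdev(values)


def lighting_consistent(day_stats, night_stats, max_mean_diff=15, max_std_ratio=1.3):
    """True if two (mean, std) pairs are within the (hypothetical) tolerances."""
    (d_mean, d_std), (n_mean, n_std) = day_stats, night_stats
    mean_ok = abs(d_mean - n_mean) <= max_mean_diff
    lo, hi = sorted((d_std, n_std))
    std_ok = hi <= lo * max_std_ratio if lo > 0 else hi == 0
    return mean_ok and std_ok


if __name__ == "__main__":
    import cv2  # only needed when reading real photos

    stats = []
    for path in ("day.jpg", "night.jpg"):
        gray = cv2.imread(path, cv2.IMREAD_GRAYSCALE)
        if gray is None:
            raise SystemExit(f"Could not read {path}")
        # NumPy is far faster than the statistics module on full-size images
        stats.append((float(gray.mean()), float(gray.std())))
    print("day:", stats[0], "night:", stats[1])
    print("OK" if lighting_consistent(*stats) else "Lighting differs - adjust LED/shading")
```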

1.4 Wiring and Power

1. Connect Pi Camera (already done above)

2. Insert the MicroSD card once it has been flashed (Step 2.1)

3. Connect LED ring light to USB port

4. Connect Ethernet (Pi 4 only) or plan WiFi setup

5. Connect power supply LAST (Pi will boot)

Power requirements:

- Pi Zero 2 W: 2.5A minimum (especially with camera + LED)

- Pi 4: 3A official PSU (if running OctoPrint also)

Step 2: Software Installation

2.1 Flash Raspberry Pi OS

On your computer:

- Download Raspberry Pi Imager: https://www.raspberrypi.com/software/

- Insert MicroSD card into computer

- Launch Imager

- OS: Raspberry Pi OS Lite (64-bit) Bookworm - no desktop needed

- Storage: Select your MicroSD card

- Settings (⚙️):

  - Set hostname: printmonitor (the Pi will then be reachable as printmonitor.local)

  - Enable SSH

  - Set username: pi, and choose a password

  - Configure WiFi (if using wireless)

- Click Write and wait ~5 minutes

2.2 Boot and Connect

# Insert MicroSD into Pi and power on

# Wait 60-90 seconds for first boot

# From your computer, SSH into Pi:

ssh pi@printmonitor.local

# (or use IP address if .local doesn't work)

# Enter the password you set in Imager

2.3 Update System and Install Dependencies

# Update package lists and upgrade installed packages (can take a while on a Pi Zero)

sudo apt update && sudo apt upgrade -y

# Install OpenCV and camera libraries (5-10 minutes on Pi Zero)

sudo apt install -y \

python3-opencv \

python3-picamera2 \

python3-numpy \

python3-requests \

python3-pip

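# Test camera (enabled by default on Bookworm; no raspi-config change needed)

libcamera-hello --list-cameras

# (newer images also provide this command as rpicam-hello --list-cameras)

# Expected output: an "Available cameras" list with one entry, e.g. "0 : imx219 ..." for Camera Module V2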

If camera is NOT detected:

# Check physical connection first (cable orientation!)

# Reboot and test again

sudo reboot

# Wait 60s, SSH back in

libcamera-hello --list-cameras

# If still not working, check /boot/firmware/config.txt (its location on Bookworm):

sudo nano /boot/firmware/config.txt

# Ensure this line exists (should be default):

# camera_auto_detect=1

# Save (Ctrl+O, Enter, Ctrl+X) and reboot

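Important: On Raspberry Pi OS Bookworm (current version), the camera is enabled by default through libcamera, so no raspi-config change is needed. Bookworm does not support the old “Legacy Camera” stack; if an older image offers that option in raspi-config, leave it disabled, because Picamera2 only works with libcamera.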

Step 3: Build the Detection System

3.1 Create Project Structure

# Create project directory

mkdir -p ~/print-monitor

cd ~/print-monitor

# Create subdirectories

mkdir captures logs

3.2 Spaghetti Detection Script

Create detect_failures.py:

#!/usr/bin/env python3
"""
3D Print Failure Detector - Computer Vision Edition
Monitors print bed and detects spaghetti failures using edge density analysis
NO AI/ML - uses OpenCV edge detection only
"""

import os
import sys
import time
from datetime import datetime

import cv2
import numpy as np
import requests
from picamera2 import Picamera2


class PrintMonitor:
    def __init__(self, threshold=0.12, check_interval=30):
        """
        Initialize print monitor using computer vision

        Args:
            threshold: Edge density threshold for spaghetti detection
                Lower = more sensitive (0.08-0.10)
                Higher = less sensitive (0.15-0.20)
                Default 0.12 works for most prints
            check_interval: Seconds between checks
        """
        self.threshold = threshold
        self.check_interval = check_interval
        self.baseline_image = None
        self.camera = None
        self.previous_frame = None

        # OctoPrint settings (optional)
        self.octoprint_url = None  # e.g., "http://octopi.local"
        self.octoprint_key = None  # API key from OctoPrint settings

        # Statistics
        self.checks_performed = 0
        self.failures_detected = 0

    def initialize_camera(self):
        """Set up the Pi Camera with fixed settings for consistent CV results"""
        print("Initializing camera...")
        self.camera = Picamera2()

        # Configure for still capture
        # Lower resolution = faster processing on Pi Zero
        config = self.camera.create_still_configuration(
            main={"size": (640, 480)},  # Sufficient for edge detection
            controls={
                "ExposureTime": 15000,  # 15ms fixed exposure (adjust if too dark/bright)
                "AnalogueGain": 1.0,    # Minimal gain = less noise
            },
        )
        self.camera.configure(config)
        self.camera.start()

        # Warm up camera (sensor needs time to stabilize)
        time.sleep(2)
        print("✓ Camera ready!")

    def capture_image(self):
        """Capture an image and return it in OpenCV's BGR channel order"""
        frame = self.camera.capture_array()
        # Picamera2 returns RGB, convert to BGR for OpenCV
        frame = cv2.cvtColor(frame, cv2.COLOR_RGB2BGR)
        return frame

    def set_baseline(self):
        """
        Capture baseline image when print starts normally
        Used for comparison (optional - not critical for edge detection)
        """
        print("Capturing baseline image...")
        self.baseline_image = self.capture_image()

        # Save baseline for reference
        timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
        cv2.imwrite(f"captures/baseline_{timestamp}.jpg", self.baseline_image)
        print("✓ Baseline captured!")

    def detect_spaghetti(self, frame):
        """
        Detect spaghetti failures using edge density analysis

        How it works:
        1. Convert to grayscale
        2. Blur to reduce noise
        3. Canny edge detection
        4. Calculate edge density per region
        5. High density + high variation = spaghetti

        Returns:
            (is_failure, confidence, debug_image)
        """
        # Convert to grayscale (faster processing)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Apply Gaussian blur to reduce noise (dust, small imperfections)
        blurred = cv2.GaussianBlur(gray, (5, 5), 0)

        # Canny edge detection
        # Low threshold: 50 (weak edges), high threshold: 150 (strong edges)
        edges = cv2.Canny(blurred, 50, 150)

        # Divide frame into quadrants to detect regional chaos
        height, width = edges.shape
        regions = [
            edges[0:height//2, 0:width//2],           # Top-left
            edges[0:height//2, width//2:width],       # Top-right
            edges[height//2:height, 0:width//2],      # Bottom-left
            edges[height//2:height, width//2:width],  # Bottom-right
        ]

        # Calculate edge density for each region (fraction of pixels that are edges)
        densities = []
        for region in regions:
            edge_pixels = np.count_nonzero(region)
            total_pixels = region.size
            densities.append(edge_pixels / total_pixels)

        # Analyze results
        mean_density = np.mean(densities)
        density_std = np.std(densities)

        # Detection logic:
        # Normal print: Low-moderate edge density, consistent between regions
        # Spaghetti: High edge density (chaos), high variation (mess everywhere)
        # Simple infill: Low edge density, very consistent
        # Complex infill: Moderate density, but still consistent
        is_failure = (mean_density > self.threshold) and (density_std > 0.04)
        confidence = min(1.0, (mean_density - self.threshold) / self.threshold) if is_failure else 0

        # Create debug visualization
        debug_img = frame.copy()
        h, w = frame.shape[:2]

        # Draw region dividers
        cv2.line(debug_img, (w//2, 0), (w//2, h), (0, 255, 0), 2)
        cv2.line(debug_img, (0, h//2), (w, h//2), (0, 255, 0), 2)

        # Add density values to each quadrant
        for i, density in enumerate(densities):
            x = (i % 2) * (w // 2) + 10
            y = (i // 2) * (h // 2) + 30
            color = (0, 0, 255) if density > self.threshold else (0, 255, 0)
            cv2.putText(debug_img, f"D:{density:.3f}", (x, y),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.6, color, 2)

        # Add overall status
        status_text = "FAILURE!" if is_failure else "OK"
        status_color = (0, 0, 255) if is_failure else (0, 255, 0)
        cv2.putText(debug_img, status_text, (10, h - 20),
                    cv2.FONT_HERSHEY_SIMPLEX, 1.0, status_color, 2)

        # Add statistics
        cv2.putText(debug_img, f"Mean:{mean_density:.3f} Std:{density_std:.3f}",
                    (10, h - 50), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)

        return is_failure, confidence, debug_img

    def pause_print(self):
        """Pause print via OctoPrint API (if configured)"""
        if not self.octoprint_url or not self.octoprint_key:
            print("⚠ OctoPrint not configured - cannot pause automatically")
            print("  Configure in script to enable auto-pause")
            return False

        try:
            headers = {"X-Api-Key": self.octoprint_key}
            response = requests.post(
                f"{self.octoprint_url}/api/job",
                headers=headers,
                json={"command": "pause", "action": "pause"},
                timeout=5,
            )
            if response.status_code == 204:  # 204 No Content = success
                print("✓ Print paused via OctoPrint")
                return True
            else:
                print(f"⚠ OctoPrint pause failed: HTTP {response.status_code}")
                return False
        except Exception as e:
            print(f"⚠ Error pausing print: {e}")
            return False

    def send_notification(self, message, photo_path=None):
        """
        Send notification - SIMPLE VERSION using requests library
        Extend this method to add your preferred notification service
        """
        print(f"🚨 ALERT: {message}")

        # TODO: Implement your notification method here
        # Examples below:

        # TELEGRAM (simple, no async needed):
        # token = "YOUR_BOT_TOKEN"
        # chat_id = "YOUR_CHAT_ID"
        # api_url = f"https://api.telegram.org/bot{token}/"
        # try:
        #     if photo_path:
        #         with open(photo_path, 'rb') as f:
        #             requests.post(api_url + "sendPhoto",
        #                           data={'chat_id': chat_id, 'caption': message},
        #                           files={'photo': f}, timeout=10)
        #     else:
        #         requests.post(api_url + "sendMessage",
        #                       data={'chat_id': chat_id, 'text': message},
        #                       timeout=10)
        # except Exception as e:
        #     print(f"Telegram notification failed: {e}")

        # PUSHOVER:
        # requests.post("https://api.pushover.net/1/messages.json",
        #               data={"token": "YOUR_APP_TOKEN", "user": "YOUR_USER_KEY",
        #                     "message": message}, timeout=10)

        # EMAIL via Gmail:
        # import smtplib
        # server = smtplib.SMTP('smtp.gmail.com', 587)
        # server.starttls()
        # server.login("your@gmail.com", "app_password")
        # server.sendmail("your@gmail.com", "recipient@email.com", message)
        # server.quit()

    def monitor(self):
        """Main monitoring loop"""
        print("\n" + "=" * 60)
        print("  3D PRINT FAILURE MONITOR (Computer Vision)")
        print("=" * 60)
        print("Detection method: Edge density analysis (CV, not AI)")
        print(f"Sensitivity: {self.threshold:.2f} (lower = more sensitive)")
        print(f"Check interval: {self.check_interval}s")
        print("=" * 60 + "\n")

        # Images are saved relative to the working directory
        os.makedirs("captures", exist_ok=True)
        self.initialize_camera()

        try:
            # Wait for user to start print
            print("⏳ START YOUR PRINT NOW")
            print("   Wait for first 3-5 layers to complete (proper adhesion)")
            if sys.stdin.isatty():
                input("   Press ENTER when ready to start monitoring...")
            else:
                # No terminal (e.g. running as a systemd service): input() would raise EOFError
                print("   No terminal detected - starting monitoring immediately")

            # Capture baseline (optional, for visual comparison)
            self.set_baseline()

            print(f"\n👁️ Monitoring every {self.check_interval} seconds...")
            print("   Press Ctrl+C to stop\n")

            while True:
                # Capture current frame
                frame = self.capture_image()

                # Check for failures using CV
                is_failure, confidence, debug_img = self.detect_spaghetti(frame)

                # Save debug image (shows edge densities)
                timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
                debug_path = f"captures/check_{timestamp}.jpg"
                cv2.imwrite(debug_path, debug_img)
                self.checks_performed += 1

                if is_failure:
                    self.failures_detected += 1
                    print(f"\n{'=' * 60}")
                    print("🚨 FAILURE DETECTED!")
                    print(f"{'=' * 60}")
                    print(f"Confidence: {confidence:.1%}")
                    print(f"Time: {datetime.now().strftime('%H:%M:%S')}")
                    print(f"Check #{self.checks_performed}")

                    # Save failure image (raw frame, without overlays)
                    failure_path = f"captures/FAILURE_{timestamp}.jpg"
                    cv2.imwrite(failure_path, frame)
                    print(f"Saved: {failure_path}")

                    # Pause print
                    if self.pause_print():
                        print("✓ Print paused automatically")
                    else:
                        print("⚠ MANUAL ACTION REQUIRED - Go check your printer!")

                    # Send notification
                    self.send_notification(
                        f"Print failure detected at {datetime.now().strftime('%H:%M:%S')}",
                        photo_path=failure_path,
                    )

                    # Stop monitoring (remove this break if you want ongoing checks)
                    print("\nMonitoring stopped. Check your printer!")
                    break
                else:
                    # Print OK
                    print(f"✓ Check #{self.checks_performed} at "
                          f"{datetime.now().strftime('%H:%M:%S')} - Print OK")

                # Wait before next check
                time.sleep(self.check_interval)

        except KeyboardInterrupt:
            print("\n\n⚠ Monitoring stopped by user")
        finally:
            self.camera.stop()
            print("\nSession statistics:")
            print(f"  Checks performed: {self.checks_performed}")
            print(f"  Failures detected: {self.failures_detected}")
            print("  Images saved: captures/")
            print("\n✓ Camera stopped")


if __name__ == "__main__":
    # ========== CONFIGURATION ==========
    monitor = PrintMonitor(
        threshold=0.12,     # Adjust sensitivity:
                            #   0.08-0.10 = very sensitive (may get false positives)
                            #   0.12-0.15 = balanced (recommended)
                            #   0.15-0.20 = less sensitive (may miss subtle failures)
        check_interval=30,  # Check every 30 seconds (adjust as needed)
    )

    # ========== OCTOPRINT INTEGRATION (OPTIONAL) ==========
    # Uncomment and fill in to enable automatic print pausing:
    # monitor.octoprint_url = "http://octopi.local"  # Your OctoPrint URL
    # monitor.octoprint_key = "YOUR_API_KEY_HERE"    # From OctoPrint Settings > API

    # ========== START MONITORING ==========
    monitor.monitor()

3.3 Make Script Executable

chmod +x detect_failures.py

Step 4: Testing and Calibration

4.1 Test Camera View

# Test camera and check framing (run from the project folder)
cd ~/print-monitor
python3 << 'EOF'
from picamera2 import Picamera2
import cv2
import time

camera = Picamera2()
camera.start()
time.sleep(2)
frame = camera.capture_array()
cv2.imwrite('test_view.jpg', cv2.cvtColor(frame, cv2.COLOR_RGB2BGR))
camera.stop()
print('✓ Test image saved as test_view.jpg')
print('  Transfer to your computer to check framing:')
print('  scp pi@printmonitor.local:~/print-monitor/test_view.jpg .')
EOF

Good camera view checklist:

- ✅ Entire print bed visible

- ✅ Print area well-lit and in focus

- ✅ Nozzle visible but not blocking view

- ✅ No glare or reflections from bed surface

- ✅ Consistent lighting (test at different times of day)

4.2 Calibrate Sensitivity

Start with a KNOWN GOOD PRINT:

cd ~/print-monitor

python3 detect_failures.py

# Let it run for 10-15 minutes on a successful print

# Watch the output - should show "Print OK" consistently

Check the debug images:

ls -lh captures/check_*.jpg

# Transfer a few to your computer:

scp pi@printmonitor.local:~/print-monitor/captures/check_*.jpg .

# Look at the density values in each quadrant

# Normal prints: 0.05-0.12 typical range

Adjust threshold if needed:

Edit the configuration block at the bottom of detect_failures.py:

monitor = PrintMonitor(
    threshold=0.15,  # INCREASE if getting false positives
                     # DECREASE if missing real failures
    check_interval=30
)

Common adjustments:

- Complex infill (Gyroid, honeycomb): Increase to 0.15-0.18

- Simple prints (walls, boxes): Can use default 0.12

- Detailed models (miniatures): May need 0.15-0.20 to avoid false positives
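If you note the Mean value printed on a handful of debug images from a known-good print and from a failure (real or simulated as in 4.3), you can sweep candidate thresholds instead of guessing. This sketch covers only the mean-density half of the rule (the script's std > 0.04 condition still applies), and pick_threshold is a hypothetical helper, not part of detect_failures.py.

```python
def pick_threshold(good_means, bad_means, candidates=None):
    """Return candidate thresholds that keep every good check at or below the
    threshold and every failure check above it.

    good_means: "Mean" values read off debug images from a known-good print
    bad_means:  "Mean" values from checks taken during a failure
    """
    if candidates is None:
        # 0.08, 0.09, ... 0.20 (rounded to avoid float drift)
        candidates = [round(0.08 + 0.01 * i, 2) for i in range(13)]
    passing = []
    for t in candidates:
        no_false_alarms = all(m <= t for m in good_means)  # script flags only mean > t
        catches_failures = all(m > t for m in bad_means)
        if no_false_alarms and catches_failures:
            passing.append(t)
    return passing


# Hypothetical readings copied from debug images
print(pick_threshold(good_means=[0.06, 0.09, 0.11], bad_means=[0.17, 0.19]))
```

An empty result means the good and bad readings overlap, which usually points back to lighting or framing rather than the threshold. Picking a value from the middle of the returned range, rather than its lowest end, leaves some margin for prints busier than the ones you sampled.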

4.3 Test Failure Detection

Safe test without ruining a print:

# Option 1: Simulate spaghetti

# While monitoring is running, wave hand rapidly over print bed

# This creates chaos = high edge density

# Option 2: Intentional adhesion failure

# Start print WITHOUT bed adhesion (no glue, cold bed)

# Let first layer fail and curl up

# Monitor should detect the mess within 2-3 checks

What to expect:

- Normal print: Edge density 0.05-0.12, consistent between regions

- Failure: Edge density >0.15, high variation (>0.04 std dev)

Step 5: Integration with OctoPrint (Optional)

If you’re running OctoPrint on the same Pi or a separate one:

5.1 Get OctoPrint API Key

- Open OctoPrint web interface

- Settings (wrench icon) → API

- Copy “API Key” (long hex string)

5.2 Configure Script

Edit the OctoPrint integration lines near the bottom of detect_failures.py (inside the if __name__ == "__main__": block):

# Uncomment and fill in:

monitor.octoprint_url = "http://octopi.local" # Your OctoPrint URL

monitor.octoprint_key = "YOUR_API_KEY_PASTE_HERE" # API key from step 5.1

5.3 Test OctoPrint Connection

# From Pi, test API:

curl -H "X-Api-Key: YOUR_KEY_HERE" http://octopi.local/api/version

# Should return JSON with OctoPrint version

# If error: check URL and API key
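The same check can be run from Python, closer to how detect_failures.py talks to OctoPrint. This sketch uses only the standard library (urllib) instead of requests; the opener parameter is a hypothetical hook added so the function can be exercised without a live server. OctoPrint's /api/version endpoint answers with JSON containing fields such as api, server and text.

```python
import json
import urllib.request


def octoprint_version(base_url, api_key, opener=urllib.request.urlopen, timeout=5):
    """GET <base_url>/api/version with the X-Api-Key header and return the parsed JSON.

    Raises urllib.error.HTTPError (e.g. wrong key) or URLError (host unreachable).
    """
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/version",
        headers={"X-Api-Key": api_key},
    )
    with opener(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Replace with your own URL and API key
    info = octoprint_version("http://octopi.local", "YOUR_KEY_HERE")
    print(info.get("text", info))
```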

5.4 Auto-Start on Boot (Advanced)

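If you want the monitor to start automatically at boot, note that a service has no terminal, so the script's "Press ENTER" prompt cannot be answered. Make sure the script only calls input() when sys.stdin.isatty() is true; otherwise input() raises EOFError, the script exits with an error, and Restart=on-failure restarts it over and over.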

Create systemd service:

sudo nano /etc/systemd/system/printmonitor.service

Paste:

[Unit]
Description=3D Print Failure Monitor
Wants=network-online.target
After=network-online.target

[Service]
Type=simple
User=pi
WorkingDirectory=/home/pi/print-monitor
ExecStart=/usr/bin/python3 /home/pi/print-monitor/detect_failures.py
# Write print() output to the log immediately instead of buffering it
Environment=PYTHONUNBUFFERED=1
Restart=on-failure
StandardOutput=append:/home/pi/print-monitor/logs/monitor.log
StandardError=append:/home/pi/print-monitor/logs/monitor.log

[Install]
WantedBy=multi-user.target

Enable:

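# Reload systemd so it picks up the new unit file, then enable it at boot:

sudo systemctl daemon-reload

sudo systemctl enable printmonitor.service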

# To start manually:

sudo systemctl start printmonitor.service

# To check status:

sudo systemctl status printmonitor.service

Advanced Features

Feature 1: Time-Lapse Creation

Add this method to PrintMonitor class:

def create_timelapse(self, output_path="timelapse.mp4", fps=30):
    """Create time-lapse video from captured check images"""
    import glob

    # Every check_*.jpg in captures/ (all sessions), in timestamp order
    images = sorted(glob.glob("captures/check_*.jpg"))
    if len(images) < 10:
        print("Not enough images for time-lapse (need 10+)")
        return

    # Read first image to get dimensions
    frame = cv2.imread(images[0])
    height, width = frame.shape[:2]

    # Create video writer
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    video = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    print(f"Creating time-lapse from {len(images)} images...")
    for i, img_path in enumerate(images):
        frame = cv2.imread(img_path)
        if frame is None:
            continue  # Skip unreadable or corrupt files
        video.write(frame)
        if i % 10 == 0:
            print(f"  {i}/{len(images)} frames processed...")

    video.release()
    print(f"✓ Time-lapse saved: {output_path}")
    print(f"  Duration: {len(images)/fps:.1f}s at {fps}fps")

Create time-lapse after successful print:

# After print finishes, in Python shell:

from detect_failures import PrintMonitor

m = PrintMonitor()

m.create_timelapse("my_print_timelapse.mp4", fps=15)
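The numbers behind the time-lapse are simple: one image is saved per check, so the video covers images × check_interval seconds of real time and plays for images ÷ fps seconds. Note that create_timelapse() reads the check_*.jpg debug images, so the quadrant lines and density text appear in the video. A small planning helper (hypothetical, not part of the script):

```python
def timelapse_stats(num_images, check_interval_s=30, fps=15):
    """Return (real_seconds_covered, video_seconds, speedup) for a check-image time-lapse."""
    real_s = num_images * check_interval_s  # one saved image per check
    video_s = num_images / fps              # same formula create_timelapse() prints
    speedup = check_interval_s * fps        # real seconds shown per second of video
    return real_s, video_s, speedup


real_s, video_s, speedup = timelapse_stats(960)  # 8-hour print, 30 s checks, 15 fps
print(f"{real_s / 3600:.1f} h of printing -> {video_s:.0f} s video ({speedup}x speed)")
```

For example, an 8-hour print checked every 30 seconds yields 960 images; at fps=15 that is a 64-second video, with each second of video covering 450 seconds (7.5 minutes) of printing.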

Feature 2: Temperature Logging (Requires DHT22 sensor)

If you add a DHT22 temperature sensor (£3):

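# Bookworm blocks system-wide pip installs (PEP 668), so use a virtual environment
# that can still see the apt-installed OpenCV and Picamera2:
python3 -m venv --system-site-packages ~/print-monitor/venv
~/print-monitor/venv/bin/pip install adafruit-circuitpython-dht
# Then run the monitor with ~/print-monitor/venv/bin/python3 (also in ExecStart if using systemd)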

import board
import adafruit_dht

# In __init__:
self.dht_sensor = adafruit_dht.DHT22(board.D4)  # Data pin on GPIO 4

# In monitor loop, add:
try:
    temp = self.dht_sensor.temperature
    humidity = self.dht_sensor.humidity
    if temp is not None and humidity is not None:
        print(f"   Environment: {temp:.1f}°C, {humidity:.1f}%RH")
except RuntimeError:
    pass  # DHT sensors sometimes fail to read; try again on the next check
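If you want readings kept per print rather than only printed to the console, a minimal approach is to append each reading to a CSV file. The function name and the logs/environment.csv path in the usage line are assumptions; failed reads are written as empty cells so gaps stay visible.

```python
import csv
import os
from datetime import datetime


def log_environment(path, temp_c, humidity, when=None):
    """Append one reading to a CSV log, writing a header row for a new file.

    temp_c / humidity may be None after a failed DHT22 read; they are
    logged as empty cells rather than skipped.
    """
    new_file = not os.path.exists(path) or os.path.getsize(path) == 0
    when = when or datetime.now()
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        if new_file:
            writer.writerow(["timestamp", "temp_c", "humidity_pct"])
        writer.writerow([
            when.isoformat(timespec="seconds"),
            "" if temp_c is None else f"{temp_c:.1f}",
            "" if humidity is None else f"{humidity:.1f}",
        ])


# In the monitor loop, after reading the sensor (hypothetical path):
# log_environment("logs/environment.csv", temp, humidity)
```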

Feature 3: Telegram Notifications (Simple Version)

No async needed - just use requests library:

def send_telegram(self, message, photo_path=None):
    """Send Telegram notification - simple requests version"""
    token = "YOUR_BOT_TOKEN"  # From @BotFather
    chat_id = "YOUR_CHAT_ID"  # Your Telegram user ID
    api_url = f"https://api.telegram.org/bot{token}/"

    try:
        if photo_path:
            with open(photo_path, 'rb') as f:
                response = requests.post(
                    api_url + "sendPhoto",
                    data={'chat_id': chat_id, 'caption': message},
                    files={'photo': f},
                    timeout=10
                )
        else:
            response = requests.post(
                api_url + "sendMessage",
                data={'chat_id': chat_id, 'text': message},
                timeout=10
            )

        if response.status_code == 200:
            print("✓ Telegram notification sent")
        else:
            print(f"⚠ Telegram failed: HTTP {response.status_code}")
    except Exception as e:
        print(f"⚠ Telegram error: {e}")

# To get YOUR_CHAT_ID, message @userinfobot on Telegram

Troubleshooting

Camera Not Detected

# 1. Check physical connection

# Pi Zero: Contacts facing DOWN (away from board)

# Pi 4: Blue side facing ethernet port

# 2. Test camera:

libcamera-hello --list-cameras

# 3. If "No cameras available":

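# Check /boot/firmware/config.txt (Bookworm location; older images use /boot/config.txt):

sudo nano /boot/firmware/config.txt

# Should have: camera_auto_detect=1

# Save and reboot

# 4. Don't enable "Legacy Camera" in raspi-config if an older image offers it

# Picamera2 requires the libcamera stack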

False Positives (Detecting failures on good prints)

Symptoms: Monitor constantly triggers on normal prints

Causes and fixes:

- Complex infill patterns (Gyroid, honeycomb)
  - Increase threshold to 0.15-0.18
  - These patterns create high edge density naturally

- Inconsistent lighting (shadows moving across bed)
  - Add LED ring light (CRITICAL)
  - Block sunlight from reaching printer
  - Test at night vs day - should behave the same

- Dirty camera lens
  - Wipe with microfiber cloth
  - Dust creates false edges

- Vibrations from printer
  - Stabilize camera mount
  - Ensure camera isn’t attached to moving parts

- Detailed models (miniatures, organic shapes)
  - These have naturally high edge density
  - Increase threshold to 0.15-0.20 for organic prints

Missing Real Failures

Symptoms: Monitor doesn’t catch obvious spaghetti

Causes and fixes:

- Threshold too high
  - Decrease to 0.10-0.12
  - Check captures/ images - are density values high during failure?

- Poor lighting causing washed-out image
  - Adjust LED brightness
  - Improve contrast in scene

- Camera too far away
  - Move closer to bed (20-30cm ideal)
  - Ensure bed fills 80%+ of frame

Pi Zero 2 W Performance Issues

# Check CPU temperature:

vcgencmd measure_temp

# If over 70°C:

# - Add heatsink (£2)

# - Ensure airflow

# - Consider Pi 4 if running other services

# Check processing time per check

# (the script doesn't log this; time capture_image() + detect_spaghetti() yourself)

# Should be <3 seconds per check on Pi Zero

# If longer, reduce resolution in initialize_camera():

# main={"size": (480, 360)} # was (640, 480)
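One way to measure the per-check time is to wrap the calls with time.perf_counter(); the helper names below are hypothetical. The pixel_ratio helper shows why the resolution change helps: 480 × 360 has 56.25% of the pixels of 640 × 480, and grayscale conversion, blur and Canny all work per pixel, so detection time should drop roughly in proportion (capture time may not).

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn once and return (result, elapsed_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def pixel_ratio(old_size, new_size):
    """Fraction of the original pixel count remaining after a resolution change."""
    return (new_size[0] * new_size[1]) / (old_size[0] * old_size[1])


# Inside PrintMonitor.monitor(), for example:
#   frame, t_cap = timed(self.capture_image)
#   (is_failure, confidence, debug_img), t_det = timed(self.detect_spaghetti, frame)
#   print(f"   capture {t_cap:.2f}s, detect {t_det:.2f}s")
print(pixel_ratio((640, 480), (480, 360)))
```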

OctoPrint Not Pausing

# Test API manually:

curl -X POST -H "X-Api-Key: YOUR_KEY" \

-H "Content-Type: application/json" \

-d '{"command":"pause","action":"pause"}' \

http://octopi.local/api/job

# Should return: 204 No Content (success)

# If 403 Forbidden: API key wrong

# If Connection refused: Check OctoPrint URL

# If Timeout: Network issue
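The same request can be sent from Python with the status code translated into the hints above. This sketch uses the standard library instead of requests, and the opener parameter is a hypothetical test hook. It also covers 409 Conflict, which OctoPrint's job API returns when the printer is not operational or the job is not in a state the command applies to (for example, nothing is printing).

```python
import json
import urllib.error
import urllib.request

# Likely causes for each status code from POST /api/job
HINTS = {
    204: "paused (success)",
    403: "API key wrong or missing",
    409: "printer not operational or job not pausable (is anything printing?)",
}


def pause_and_diagnose(base_url, api_key, opener=urllib.request.urlopen, timeout=5):
    """Send OctoPrint's pause command and return (status_code_or_None, hint)."""
    body = json.dumps({"command": "pause", "action": "pause"}).encode("utf-8")
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/job",
        data=body,
        headers={"X-Api-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
    try:
        with opener(req, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as e:  # 4xx/5xx responses arrive as exceptions
        status = e.code
    except urllib.error.URLError as e:   # DNS failure, connection refused, ...
        return None, f"cannot reach OctoPrint ({e.reason}): check the URL"
    except TimeoutError:
        return None, "timed out: network issue"
    return status, HINTS.get(status, f"unexpected HTTP {status}")


if __name__ == "__main__":
    # Warning: this really pauses an active print
    print(pause_and_diagnose("http://octopi.local", "YOUR_KEY"))
```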

Cost Breakdown & ROI

Budget Build (Pi Zero 2 W)

| Component | Cost | Running Total |
|---|---|---|
| Raspberry Pi Zero 2 W | £18 | £18 |
| Pi Camera Module V2 | £22 | £40 |
| MicroSD 32GB | £7 | £47 |
| Micro USB power supply | £7 | £54 |
| LED ring light USB | £10 | £64 |
| 3D printed camera mount | £0.50 | £64.50 |

Total: £64.50

Standard Build (Pi 4 + OctoPrint)

| Component | Cost | Running Total |
|---|---|---|
| Raspberry Pi 4 (2GB) | £55 | £55 |
| Pi Camera Module V2 | £22 | £77 |
| MicroSD 32GB | £7 | £84 |
| USB-C power supply (3A) | £9 | £93 |
| LED ring light USB | £10 | £103 |
| 3D printed camera mount | £0.50 | £103.50 |

Total: £103.50 (but you’re also getting OctoPrint server)

Return on Investment

Typical failure scenarios:

| Failure Type | Filament Wasted | Frequency | Cost per Failure (PLA @ £20/kg) |
|---|---|---|---|
| Spaghetti (8hr print) | ~200g | 1-2/month | £4.00 |
| Layer shift (4hr print) | ~100g | 1/month | £2.00 |
| First layer fail (caught early) | ~10g | 3-4/month | £0.20 |

My 6-month results:

- 14 spaghetti failures caught = £56 saved

- 3 layer shifts caught = £6 saved

- 8 first-layer fails caught early = £1.60 saved

- Total saved: £63.60

ROI: System roughly paid for itself in the first 6 months (£63.60 saved against a £64.50 budget build)
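The ROI arithmetic can be reproduced, or rerun with your own failure log, in a few lines. The figures are the ones from the table and the 6-month results above; the function names are illustrative.

```python
def filament_cost(grams, price_per_kg=20.0):
    """Cost in £ of wasted filament (PLA at £20/kg by default, as in the table)."""
    return grams / 1000 * price_per_kg


def total_saved(catches, price_per_kg=20.0):
    """catches: list of (number_of_failures, grams_wasted_per_failure)."""
    return sum(n * filament_cost(g, price_per_kg) for n, g in catches)


def break_even_months(build_cost, saved, months):
    """Months of use needed to recover build_cost at the observed saving rate."""
    return build_cost / (saved / months)


six_months = [(14, 200), (3, 100), (8, 10)]  # spaghetti, layer shifts, first layers
saved = total_saved(six_months)
print(f"Saved £{saved:.2f}")  # £63.60
print(f"Break-even (£64.50 build): {break_even_months(64.50, saved, 6):.1f} months")
```

By these numbers the £64.50 budget build breaks even after about 6.1 months, and the £54 build without ring light and mount after about 5.1 months.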

Bonus value:

- Peace of mind for overnight prints: Priceless

- Time-lapse videos: Fun for social media

- Learning computer vision: Educational

Limitations & When CV Isn’t Enough

This computer vision approach works great for obvious chaos (spaghetti), but has limitations:

What CV CANNOT Detect Reliably

- ❌ Subtle layer adhesion issues (slight lifting)

- ❌ Under-extrusion (weak walls, but print continues)

- ❌ Stringing (cosmetic, but not structural)

- ❌ Subtle layer shifts (<2mm offset)

- ❌ Elephant’s foot (first layer too wide)

- ❌ Warping (corners lifting slightly)

When You Need AI/ML Instead

For these advanced detections, you need a trained ML model:

- Collect 500-1000 labeled images (good vs bad prints)

- Train TensorFlow Lite model on more powerful hardware

- Deploy model to Pi (requires Pi 4 minimum)

Accuracy improvement: ~75-85% (CV) → 92-97% (trained AI)

Recommended projects:

- Obico (formerly The Spaghetti Detective; open-source, available as a cloud service or self-hosted locally)

This tutorial focuses on CV because:

- ✅ No training data needed

- ✅ Works on cheap Pi Zero 2 W

- ✅ Catches the most expensive failures (spaghetti)

- ✅ Great learning project for computer vision basics

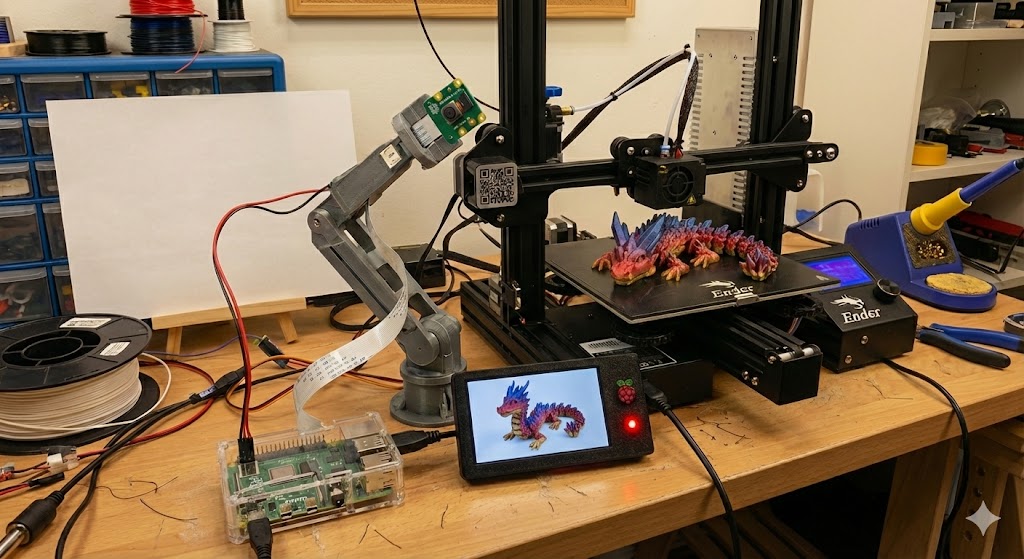

Real-World Results

My Experience (6 Months, Pi Zero 2 W)

Hardware:

- Ender 3 V2 printer

- Pi Zero 2 W with Camera Module V2

- £12 USB LED ring light

- 3D printed camera mount (Thingiverse)

Settings:

- Threshold: 0.13 (after calibration)

- Check interval: 30 seconds

- OctoPrint integration: Enabled

Failures Caught:

- ✅ 14 spaghetti failures (caught within 5-15 minutes)

- ✅ 3 layer shifts (caught within 3-10 minutes)

- ✅ 8 first-layer adhesion problems (caught in first 10 minutes)

False Positives:

- ❌ 3 false alarms in 6 months

- All 3 were due to: moving sunlight shadows (before I added blackout curtain)

Filament Saved: ~£63.60 (14 spaghetti failures × ~200g × £0.02/g = £56, plus £7.60 from layer shifts and early first-layer catches)

Unattended Print Success Rate:

- Before monitor: ~60% (40% failed when unattended)

- With monitor: ~96% (caught most failures before disaster)

Unexpected Benefits:

- Time-lapse videos helped diagnose a wobbly Z-axis

- LED ring light improved print photos for social media

- Learned OpenCV well enough to apply to work projects

Next Steps & Upgrades

Upgrade 1: Add AI Model for Better Accuracy

Once you have CV working, train a custom model:

- Collect training data:

  - Capture 50-100 images of good prints
  - Capture 50-100 images of failures
  - Label each as good/bad

- Train using Google Teachable Machine:

  - Upload images at teachablemachine.withgoogle.com
  - Export as TensorFlow Lite model

- Replace CV detection with model inference

- Requires:

  - Pi 4 (2GB minimum, 4GB recommended)
  - tflite-runtime library
  - ~500+ training images for good accuracy

Upgrade 2: Multi-Printer Monitoring

Monitor multiple printers from one Pi:

- Add USB cameras (one per printer)

- Use OpenCV to capture from multiple camera indices

- Run separate monitoring threads

- Centralized notification system
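The thread-per-printer idea can be sketched with the standard library: each printer gets its own loop, and all loops report into one shared queue that a single notifier can drain. This is a minimal sketch under assumptions: the capture callables and the detect function are injected (with real USB cameras they would wrap cv2.VideoCapture(index).read() and PrintMonitor.detect_spaghetti), the function names are hypothetical, and each loop stops after its first alert, like the single-printer script.

```python
import queue
import threading


def watch_printer(name, capture, detect, alerts, stop, interval_s=30):
    """Capture/detect loop for one printer; reports its name once, then exits."""
    while not stop.is_set():
        frame = capture()
        if frame is not None and detect(frame):
            alerts.put(name)
            return
        stop.wait(interval_s)  # sleeps, but wakes early if stop is set


def start_monitors(printers, detect, interval_s=30):
    """printers: dict of name -> capture callable. Returns (threads, alerts, stop)."""
    alerts = queue.Queue()
    stop = threading.Event()
    threads = []
    for name, capture in printers.items():
        t = threading.Thread(
            target=watch_printer,
            args=(name, capture, detect, alerts, stop, interval_s),
            daemon=True,
        )
        t.start()
        threads.append(t)
    return threads, alerts, stop


# Real use (assumes USB cameras at indices 0 and 1):
#   import cv2
#   def make_capture(cap):
#       def capture():
#           ok, frame = cap.read()
#           return frame if ok else None
#       return capture
#   detector = PrintMonitor()
#   threads, alerts, stop = start_monitors(
#       {"ender3": make_capture(cv2.VideoCapture(0)),
#        "prusa": make_capture(cv2.VideoCapture(1))},
#       detect=lambda f: detector.detect_spaghetti(f)[0])
#   print("Failure on", alerts.get())  # blocks until a printer reports
```

A single queue keeps notification logic in one place, so adding a printer only means adding one entry to the dictionary.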

Upgrade 3: Filament Runout Detection

Add optical sensor (£5):

- Detects filament presence

- Complements visual monitoring

- Catches tangles and runouts

Upgrade 4: Enclosure Environment Monitoring

Add DHT22 sensor (£3):

- Monitor temperature and humidity

- Log environmental conditions per print

- Correlate failures with temperature swings

Community & Support

Share Your Build

We’d love to see your results!

- Reddit: Post in r/3Dprinting, r/raspberry_pi, r/octoprint

- Discord: Prusa, Ender, Voron communities

- GitHub: Fork and improve the code (link coming soon)

- YouTube: Tutorial video of your setup

Tag: @detectdefects on social media

Get Help

Having issues?

- Check Troubleshooting section above

- Review your captures/ images - are density values sensible?

- Test with LED ring light (lighting is CRITICAL)

- Ask in comments below or email: james@detectdefects.com

Contribute

Want to improve this project?

- Add support for different notification services

- Improve detection algorithms

- Create better mounting designs (share STLs!)

- Write tutorials for specific printer models

Conclusion

For £45-65, you can build a system that:

- ✅ Catches 75-85% of print failures before they become disasters

- ✅ Enables truly unattended overnight printing

- ✅ Pays for itself in roughly six months of regular printing (see Return on Investment)

- ✅ Teaches you computer vision fundamentals

Key Takeaways

- CV vs AI: This uses computer vision (edge detection), not AI/ML

- Lighting is critical: LED ring light is NOT optional for reliable detection

- Realistic expectations: 75-85% accuracy for obvious failures (spaghetti)

- False positives: Complex infills may trigger false alarms - adjust threshold

- Best for: Catching expensive disasters (spaghetti) early

What You’ve Learned

- ✅ OpenCV basics (edge detection, image processing)

- ✅ Raspberry Pi camera interfacing with Picamera2

- ✅ RESTful API integration (OctoPrint)

- ✅ Real-time monitoring system design

- ✅ Practical computer vision application

More CV Projects

Want to learn more computer vision?

- Detect Scratches with OpenCV - Surface inspection techniques

- Jetson Nano Defect Detection - GPU-accelerated CV

- YOLOv8 for Manufacturing - Deep learning approach

Questions? Drop a comment below or email james@detectdefects.com

Happy printing! 🎉🖨️

Last updated: December 15, 2025

Tested on: Raspberry Pi Zero 2 W and Pi 4 (2GB)

Raspberry Pi OS: Bookworm (December 2023 release)
